bradrn wrote: ↑Fri Nov 10, 2023 11:45 pm

Richard W wrote: ↑Fri Nov 10, 2023 11:32 pm

bradrn wrote: ↑Fri Nov 10, 2023 5:33 pm

The first step is to think about the encoding and whether you want it to work with Window applications. Fortunately (unless you use certain styles of Sanskrit), text on Microsoft Edge is now rendered as though it were not a Windows applications. Unless things have improved recently, for Windows you may have to use a previously encoded script (a 'hack' encoding), whereas puristically you should the Private Use Area (PUA), which is typically lacking in support for graphic ligatures with the Windows rendering. For HarfBuzz applications, unless things have degraded, one can have ligaturing between points in the PUA.

Oh, I don’t think this is Windows-specific. There’s all sorts of special cases with this stuff. Not to mention font hinting…

Thank you both. So, as I understand it, whilst it's not ideal puristically to use a pre-existing code-block like that for Ge'ez or Devanagari, you both think it would be preferable if I did? Given that I'm conpletely unlikely to need to use a Seguwean font to write in either of those scripts.

bradrn wrote: ↑Fri Nov 10, 2023 5:33 pm

Richard W wrote: ↑Fri Nov 10, 2023 11:32 pm

Whether you encode it as a syllabary or an alphasyllabary (compare the Cree syllabary) is up to you. It's not an abugida. It may be simpler to encode it as an alphabet if you plan to use programs to modify text. Now, you may choose to treat the circle used for some of the long(?) diphthongs as a separate character, but if you don't, a font for a PUA encoding would work with Windows applications.

Linguistically speaking this

is an abugida, though that has no particular relevance for encoding.

Ok, I would be quite interested to try to put the question of classifying this system (abugida, syllabary, alphabet) to bed actually, as it's bugging me.

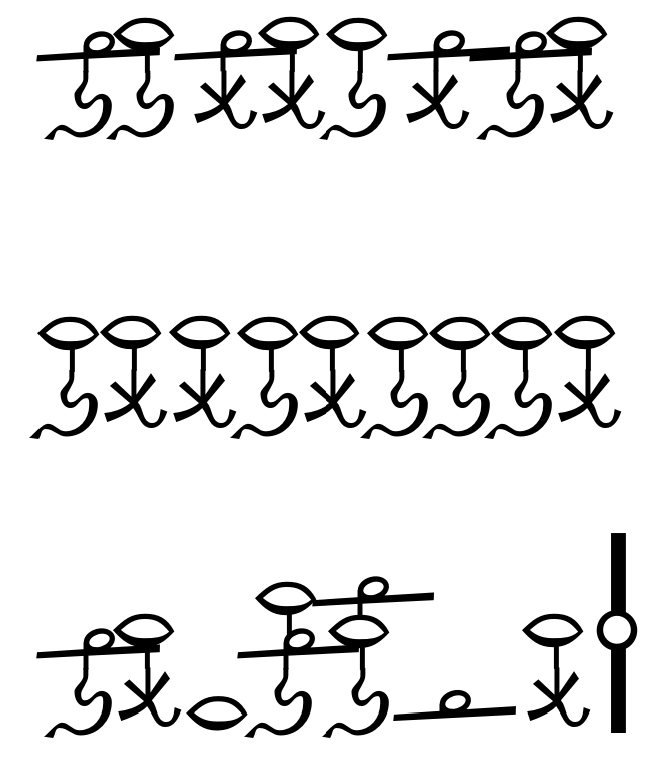

Let's start with the old set, Methakhe-kēu. This was in concept a syllabic system, just in terms of what info was being encoded in each glyph. The only diphthongs in the classical language were /æ͜ɪ/ /ɑ͜ʊ/, represented by ⟨ae⟩ ⟨au⟩, so including the long vowels, each vowel segment had its own ‘body’. One might call it an abugida - except that the consonant segments are clearly not prime, with relatively insignificant adaptations per vowel; if anything, the vowel segments are graphically dominant. One could refer to it as an alphabet or an alphabetic syllabary, like Hangeul.

However, this vowel system was pretty mangled by the time of the Eusebaze reform. Some other VV sequences had diphthongised, some of those diphthongs had monophthongised, and some segments (notably /e/) had devoiced and all but disappeared, leaving a trail of palatalisation, spirantisation and clustering in their wake... And dialectal and register variation was high.

The reformers left actual sound alone; whilst even the Emperor's speech wasn't going to match the clean logic of Eusebaze, that wasn't the point. In fact, syllables were no longer the point. Whilst ⟨kēu⟩ might be heard in Makemura as /cɨː͡ʉ/, in Kemudaru as /çœː/, and in Lesuše as /'ki.lu/ ‒ i.e. with varying weights/moraic structures ‒ there would still be just one ‘body’ used.

Once decoupled from the concept of the syllable, the scribes extended this trait beyond its original utility: now any sequence which had been VV in the classical language, including those that crossed morphemic boundaries, might be written with the appropriate single ‘body’. This had been occasional with ⟨ae au⟩ pre-reform, in shorthand; but the reformers wanted to (a) rebrand the administration with the slightly ostentatious new glyphs, e.g. the shark glyph, (b) make official-style writing slightly

less accessible to the rising merchant classes, and (c) to make their own jobs (writing) quicker/more compact, regardless of the cost for those parsing their texts.

To use one of my previous examples (on the drive), ‘inhabitant of Usage’ is ‘Usage-upu-e’ in the classical language. Pre-reform you would write it as such, with separate glyphs for ⟨ge u pu e⟩, which handily makes each morpheme begin with its own glyph. Post-reform you would write it ⟨u sa geu pue⟩, with two morpheme boundaries embedded inside a ‘diphthong’ glyph. (Non-imperial officials continued to write how they wished, of course, but writing in the Eusebaze style became a marker of prestige.)

The point is, it became a mixed system which could be ‘sub-syllabic’ like Devanagari (where vowel segments had been lost, and syllable codas thus began to take their own glyphs), syllabic, or ‘super-syllabic’ (where adjoining vowel segments were compiled into one glyph). The possibility of combining ⟨-a⟩ glyphs into vertically-stacked sequences of up to 3 syllables (which, in terms of encoding, theoretically adds another 8,000 glyphs to the set

) further erodes the notion that this is a ‘syllabary’.

I think then ultimately it

sort of isn't an abugida or syllabary post-reform. Maybe it's truly an alphabet, with compulsory ligatures on a roughly syllabic scale; but it's disingenuous to describe e.g. the shark glyph as a ligature of ⟨e u⟩ as it has nothing, graphically, in common with either. So I'm still stumped ‒ not that it matters much!

In terms of consequences for encoding – I don't know. I'm grateful for all the pointers re this and sure it will become a bit clearer as I try to do it, but a lot of what you've both said has gone over my head; does anyone know of a ‘make a font (maybe with ligatures)’ guide for

absolute noobs?

Thanks again both!